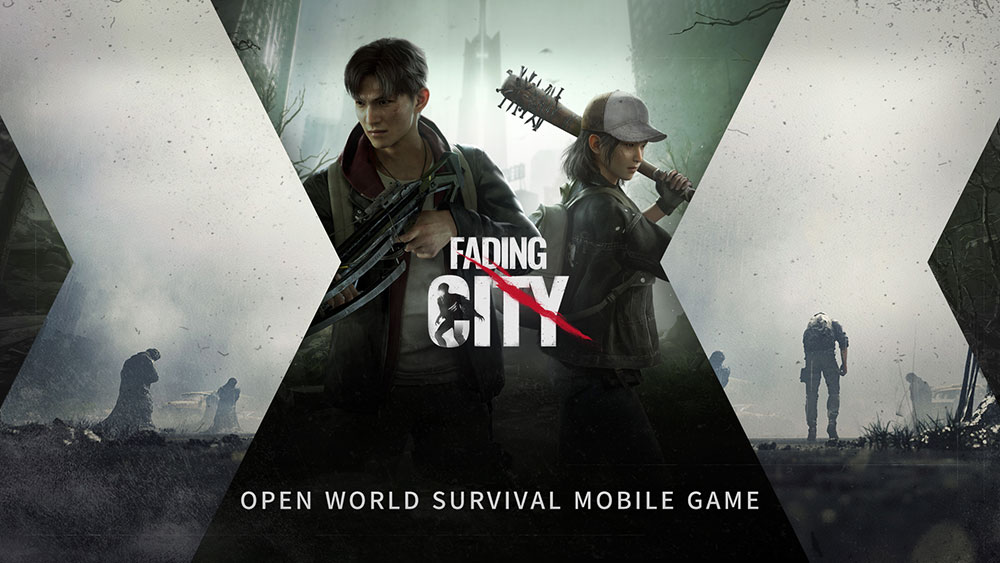

A sudden mist shrouded the city of Weidu. Under the black fog, people struggled to survive, yet could not escape the ubiquitous blue particles. Above the ruins, the former Tower of Hope still shimmered, but it did not illuminate the city's future. Order no longer exists, and life is gradually coming to an end. But the future can change, and nothing is a foregone conclusion. Humanity will meet again at the origin and forge a new hope.